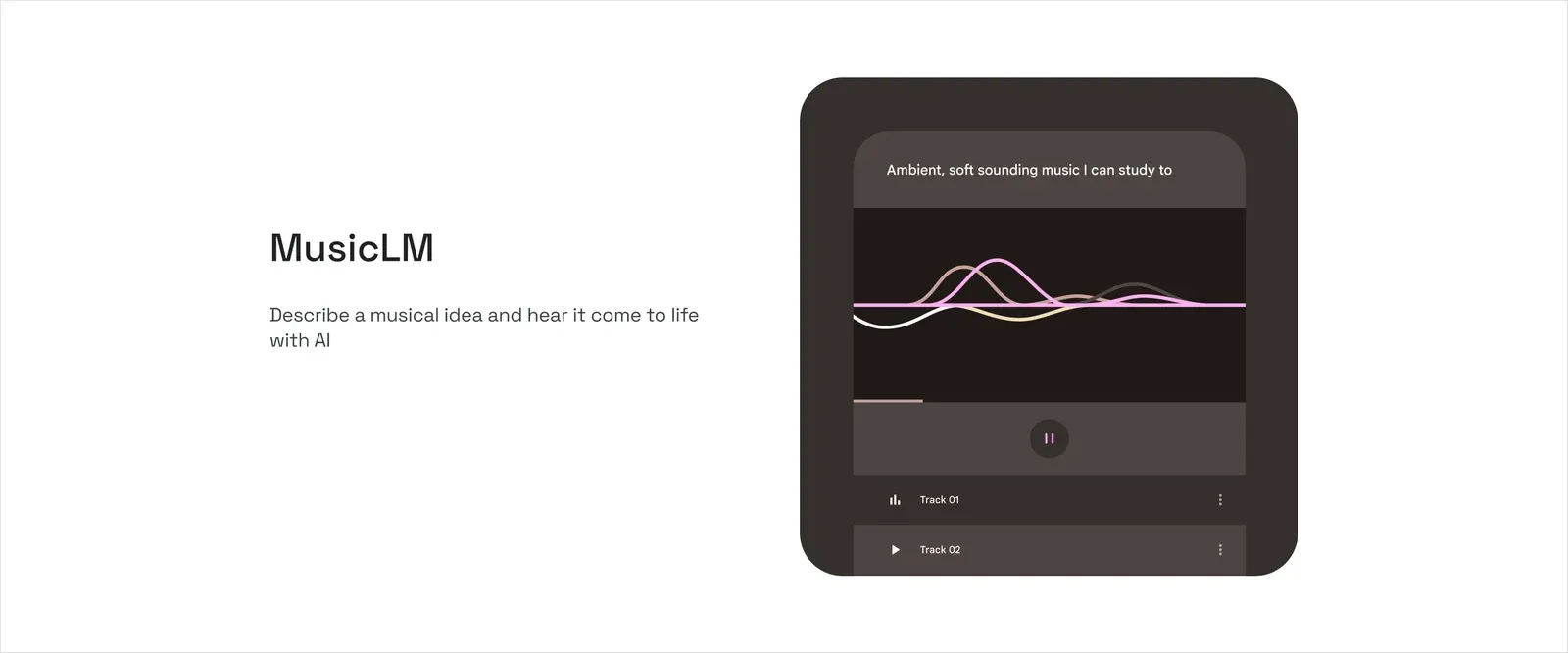

MusicLM is a research model from Google that generates high-fidelity music from text descriptions. It treats conditional music generation as a hierarchical sequence-to-sequence modeling task.

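MusicLM generates audio in stages rather than in a single step. A text description is first mapped to a conditioning representation by MuLan, a joint music-text embedding model. A first autoregressive stage then predicts a sequence of semantic tokens that capture long-term structure such as melody and rhythm, and a second stage predicts fine-grained acoustic tokens conditioned on them. Finally, the decoder of a neural audio codec (SoundStream) turns the acoustic tokens into a raw audio waveform at 24 kHz.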
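As a rough illustration of this staged flow, the sketch below passes a prompt through placeholder stages: text to conditioning tokens, then semantic tokens, then codec tokens, then a 24 kHz waveform. Every function name, token rate, and vocabulary size here is a hypothetical stand-in chosen for the example, not MusicLM's actual code or API, and the "models" are deterministic toy functions rather than trained networks.

```python
from dataclasses import dataclass

SAMPLE_RATE_HZ = 24_000   # output rate stated in the post
SEMANTIC_RATE_HZ = 25     # assumed semantic tokens per second (illustrative)
ACOUSTIC_RATE_HZ = 50     # assumed codec frames per second (illustrative)
CODEBOOK_LEVELS = 12      # assumed quantizer levels per codec frame (illustrative)
VOCAB_SIZE = 1024         # assumed vocabulary size for all token types (illustrative)


@dataclass
class Clip:
    semantic_tokens: list
    acoustic_tokens: list  # one list of CODEBOOK_LEVELS tokens per codec frame
    waveform: list         # float samples in [-1, 1]


def embed_text(prompt: str, n_tokens: int = 12) -> list:
    """Toy text encoder: hash the prompt into a few conditioning tokens."""
    seed = sum(prompt.encode("utf-8"))
    return [(seed * (i + 1)) % VOCAB_SIZE for i in range(n_tokens)]


def semantic_stage(cond: list, seconds: int) -> list:
    """Toy autoregressive stage: each token depends on the previous one and on cond."""
    tokens, prev = [], 0
    for t in range(seconds * SEMANTIC_RATE_HZ):
        prev = (prev * 31 + cond[t % len(cond)] + t) % VOCAB_SIZE
        tokens.append(prev)
    return tokens


def acoustic_stage(cond: list, semantic: list, seconds: int) -> list:
    """Toy second stage: expand each semantic token into finer-grained codec frames."""
    n_frames = seconds * ACOUSTIC_RATE_HZ
    frames = []
    for f in range(n_frames):
        sem = semantic[f * len(semantic) // n_frames]  # coarse token covering this frame
        frames.append([(sem + cond[lvl % len(cond)] + lvl * f) % VOCAB_SIZE
                       for lvl in range(CODEBOOK_LEVELS)])
    return frames


def decode(frames: list) -> list:
    """Toy codec decoder: one constant amplitude per frame, held for 480 samples."""
    samples_per_frame = SAMPLE_RATE_HZ // ACOUSTIC_RATE_HZ
    wave = []
    for frame in frames:
        amp = 2 * sum(frame) / (len(frame) * (VOCAB_SIZE - 1)) - 1
        wave.extend([amp] * samples_per_frame)
    return wave


def generate(prompt: str, seconds: int) -> Clip:
    if seconds <= 0:
        raise ValueError("seconds must be a positive integer")
    cond = embed_text(prompt)
    semantic = semantic_stage(cond, seconds)
    acoustic = acoustic_stage(cond, semantic, seconds)
    return Clip(semantic, acoustic, decode(acoustic))


clip = generate("a calming violin melody backed by a distorted guitar riff", seconds=2)
print(len(clip.semantic_tokens), len(clip.acoustic_tokens), len(clip.waveform))
```

With these assumed rates, two seconds of audio prints `50 100 48000`: a short coarse sequence, a longer fine-grained one, and the full-rate waveform. That widening at each step is the point of a hierarchical design, since the early stage can plan over a long time span cheaply while later stages fill in detail.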

MusicLM can generate music that stays consistent with the text description over several minutes. It can also be conditioned on a melody as well as text: given a whistled or hummed tune, it renders that melody in the style the text describes.

To support further research, Google has also released MusicCaps, a dataset of 5.5k music-text pairs with rich text descriptions written by human experts. You can listen to examples of MusicLM's output on the project website, and the accompanying paper covers the model architecture and training in more detail.

MusicLM is an exciting step towards generating music from natural language, and it opens up new possibilities for creative expression and musical exploration. If you are interested in learning more, you can read the MusicLM paper or visit the project's page on GitHub.
